EU AI Act Explained: What It Means for Nonprofits & Associations


EU AI Act Explained: What It Means for Nonprofits & Associations – TL;DR

The EU AI Act is a risk-based law designed to ensure ethical and responsible AI use. Associations handling EU member data must understand compliance requirements, including transparency, data governance, and accountability.

High-risk AI requires strict oversight, while limited-risk AI focuses on transparency. Responsible adoption builds trust, improves engagement, and aligns with nonprofit values.

Platforms like KITABOO make compliance easier by securing content, managing accessibility, and integrating with CRM systems.

| Key Aspect | Summary |
|---|---|
| Law Type | Risk-based regulation for AI use in the EU. |
| Who It Applies To | Organizations that provide or use AI systems in the EU, or whose AI output is used there, wherever they are headquartered. |
| AI Risk Categories | Unacceptable, High, Limited, Minimal. |
| High-Risk AI | Requires strict oversight, risk assessment, and governance. |
| Limited-Risk AI | Transparency: disclosing AI use and labeling AI-generated content. |
| Benefits of Compliance | Builds trust, supports ethical AI adoption, and enhances member engagement. |
| Role of Platforms | KITABOO supports secure, accessible, and integrated AI content management. |

Have you ever wondered what happens when AI makes decisions about your members’ lives? For nonprofits and associations, this is no longer a distant question.

The EU AI Act requires organizations to think carefully about how they use AI. For associations handling EU member data, the implications are significant: the Act sets rules to ensure AI operates transparently, ethically, and responsibly.

Your AI tools, whether for member management, communications, or training, could fall under strict scrutiny.

Understanding this law will help you preserve your members' trust and your organization's credibility. Let's uncover how associations can navigate the EU AI Act while using AI confidently and responsibly.

Table of Contents

- Why Was the EU AI Act Needed?

- What is the EU AI Act?

- How Will the Act Change Things for Nonprofits & Associations?

- What are the Implications for Associations Handling EU Member Data?

- How Can Associations Prepare for Compliance?

- Why Is the EU AI Act an Opportunity, Not Just a Burden?

- What Role Do Digital Publishing Platforms Play in Compliance?

- Conclusion

- FAQs

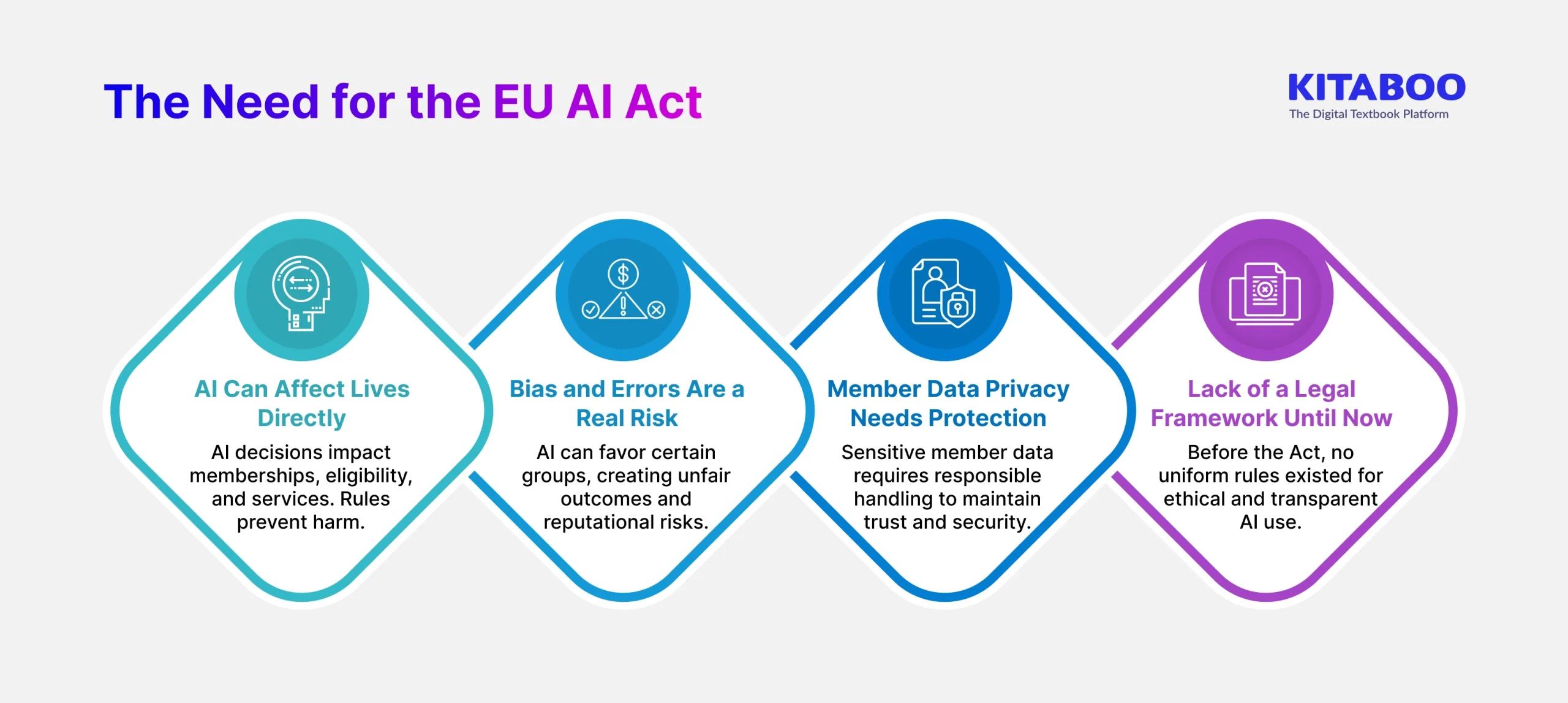

Why Was the EU AI Act Needed?

Artificial intelligence is making decisions that affect people’s lives more than ever. Nonprofits and associations increasingly rely on AI for member data management, communications, and training.

Without clear rules, biases, errors, and privacy breaches can harm members and damage trust. The EU AI Act was created to fill this gap and ensure AI operates ethically.

It’s important to understand the implications for associations handling EU member data to prevent risks. This law is a guide to protecting members and maintaining credibility while using AI effectively.

1. AI Can Affect Lives Directly

AI systems now influence memberships, service eligibility, and organizational decisions. Without rules, these tools could make unfair or harmful decisions about members.

Nonprofits need guidance to ensure AI enhances member engagement without unintended consequences.

2. Bias and Errors Are a Real Risk

AI can unintentionally favor certain groups or profiles over others. This may lead to inequitable treatment and damage an association’s reputation.

The EU AI Act encourages fairness and accountability in all AI-driven processes.

3. Member Data Privacy Needs Protection

Nonprofits handle sensitive member information that AI often processes. Improper handling could compromise privacy and break trust with members.

The Act complements the GDPR, which continues to govern how personal data is processed, by requiring AI systems to operate transparently and responsibly.

4. Lack of a Legal Framework Until Now

Before the Act, no uniform, AI-specific rules existed in the EU. Organizations faced uncertainty about compliance, accountability, and ethics.

The Act provides clear standards for responsible, transparent, and ethical AI deployment.

What is the EU AI Act?

The EU AI Act is a risk-based law that sets rules for ethical, transparent, and accountable AI use in the European Union. This Act specifically regulates the use of AI systems in the EU, regardless of where the organization is headquartered.

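It was proposed by the European Commission in April 2021, agreed by the European Parliament and the Council in December 2023, and entered into force on August 1, 2024. Its obligations apply in stages: the bans on unacceptable-risk AI took effect on February 2, 2025, and most remaining requirements apply from August 2, 2026.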

Rather than banning AI outright, the Act classifies AI systems by their potential impact and scales obligations accordingly. Knowing the implications for associations handling EU member data helps organizations use AI safely.

The EU AI Act organizes AI systems into four risk categories. Understanding them is key to navigating the implications for associations handling EU member data effectively.

1. Unacceptable Risk

Unacceptable-risk AI, such as social scoring or manipulative techniques that exploit people's vulnerabilities, is banned outright because it can harm or manipulate individuals. Associations must avoid using such tools to protect members and trust.

2. High Risk

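High-risk AI can significantly affect people's health, safety, or fundamental rights, for example in education, employment, or access to essential services. These systems require careful oversight, and nonprofits must ensure they are used responsibly.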

3. Limited Risk

Limited-risk AI, such as chatbots or content tools, only requires transparency. Organizations must inform users and clearly label AI-generated outputs.

4. Minimal Risk

Minimal-risk AI includes everyday tools with negligible impact, such as spam filters. These tools face few restrictions and are widely used by nonprofits.

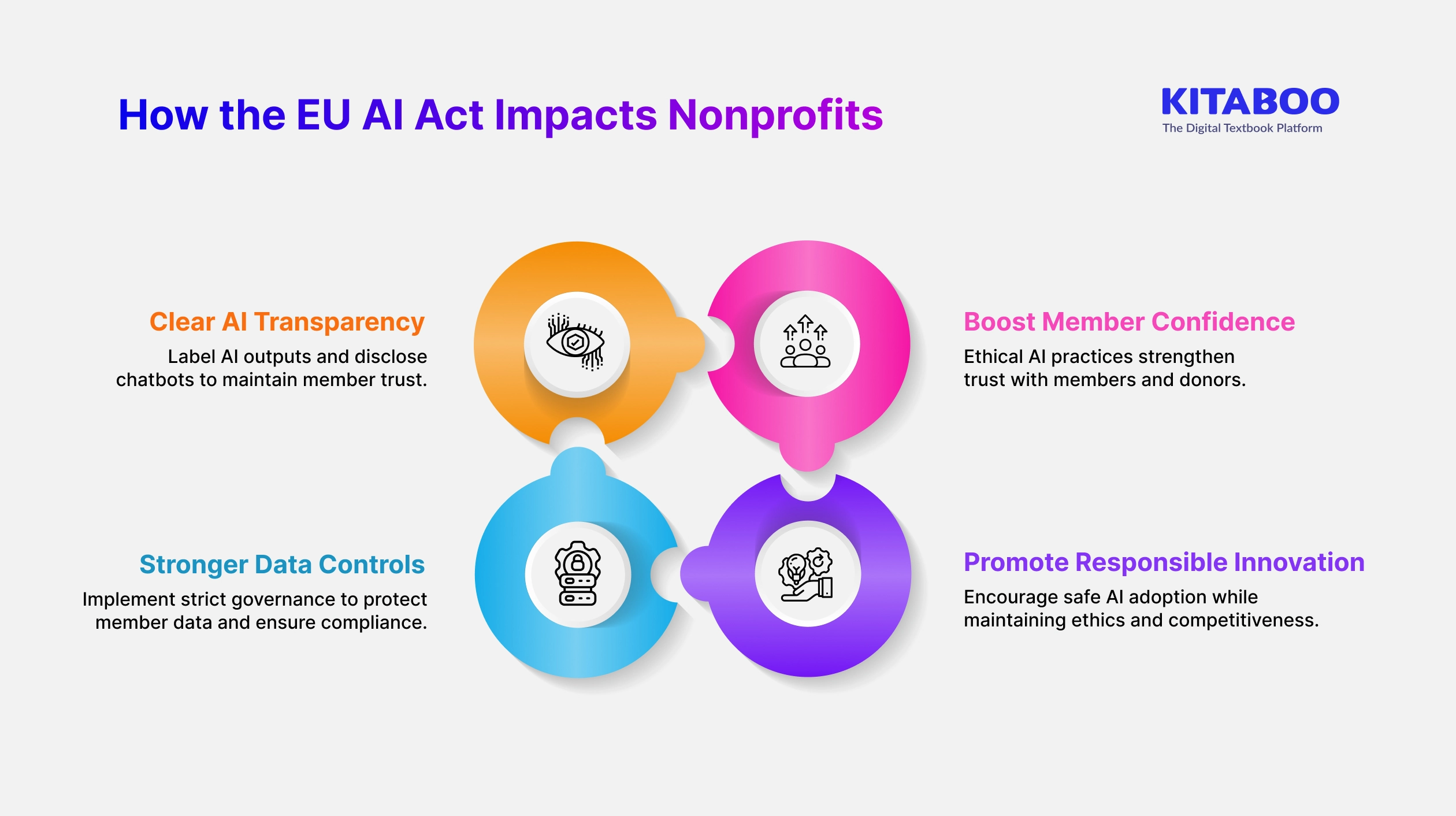

How Will the Act Change Things for Nonprofits & Associations?

The EU AI Act emphasizes ethical practices, transparency, and accountability while managing member data. Understanding the implications for associations handling EU member data is critical to navigate these changes.

Organizations that adopt the law proactively can enhance trust and operational efficiency.

1. Transparency for Limited-Risk AI

The Act requires organizations to clearly label AI outputs and disclose chatbot use. Members should always know when they are interacting with AI. This transparency helps manage expectations and ensures trust in automated systems.

2. Stronger Data Governance & Accountability

Nonprofits must implement stricter controls over how AI systems handle member data. Proper governance ensures compliance and reduces risks of errors or misuse. Associations gain confidence in AI use while safeguarding sensitive information.

3. Higher Member Trust through Ethical AI Use

Ethical and responsible AI practices strengthen relationships with members and donors. Trust grows when organizations demonstrate fairness, accountability, and transparency in AI operations.

4. Encouragement to Adopt Responsible Innovation

The EU AI Act motivates nonprofits to explore AI responsibly while minimizing risks. Organizations can innovate safely without compromising ethical standards or member trust. Responsible AI adoption ensures associations stay competitive and relevant.

What are the Implications for Associations Handling EU Member Data?

Associations routinely manage sensitive member information, including registrations, payments, communications, and training records. AI systems processing this data must comply with EU AI Act standards.

Understanding the implications for associations handling EU member data is critical to reduce risks and leverage benefits responsibly.

1. Location Matters

The EU AI Act can apply even if your headquarters are outside Europe. It covers organizations that place AI systems on the EU market or use them in the EU, as well as providers and deployers elsewhere whose AI output is used in the EU. For example, a US-based association whose AI tools serve EU members may still fall within scope, regardless of office location.

2. High-Risk AI Has Strict Rules

High-risk AI systems include eligibility screening, healthcare decisions, or educational assessments. Organizations must perform risk assessments, maintain human oversight, and follow strict governance procedures.

Compliance ensures member protection and reduces organizational liability.

3. Transparency for Limited-Risk AI

Limited-risk AI includes chatbots and tools that generate text, images, audio, or video. The main requirement is transparency.

You must disclose AI use and label AI-generated outputs clearly. This approach builds trust while allowing nonprofits to leverage AI safely.

4. Key Risks

AI can be used incorrectly, harming members or producing unintended outcomes. AI algorithms may favor certain groups, resulting in unfair or discriminatory decisions.

Members may not know AI is involved, eroding trust and credibility.

5. Key Benefits

Transparent and ethical AI strengthens relationships with members and donors. AI can deliver tailored experiences, improving engagement and learning outcomes.

Using AI responsibly helps associations and nonprofits engage members efficiently while maintaining credibility.

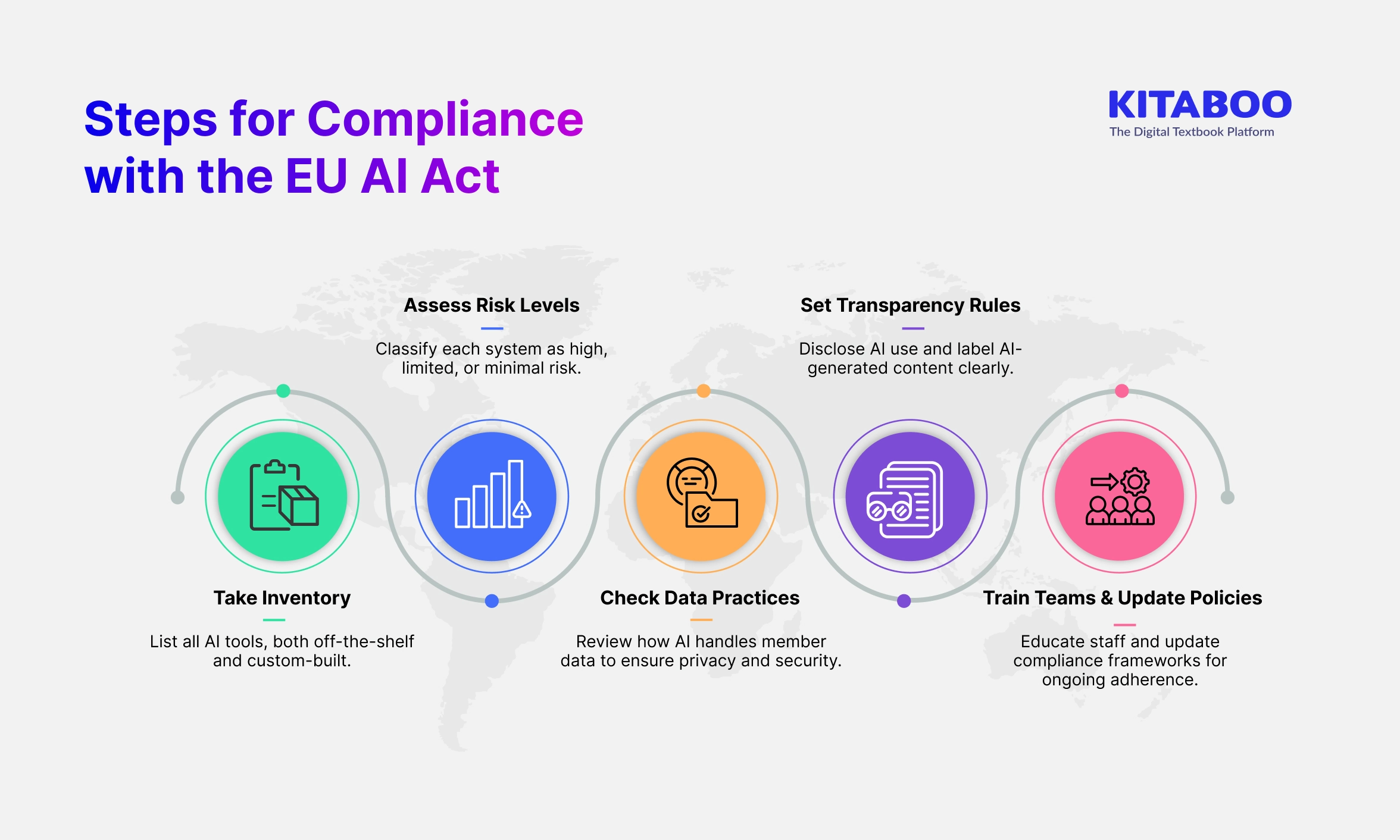

How Can Associations Prepare for Compliance?

A practical first step toward complying with the EU AI Act is an AI audit. Understanding the implications for associations handling EU member data starts with knowing which systems are in use.

A proper audit helps identify and mitigate risks before AI deployment expands.

1. Inventory All AI Systems

List all AI tools your organization uses, including off-the-shelf and custom-built solutions. This provides a complete picture of technology affecting member data.

2. Classify Each by Risk Level

Determine whether each system falls into the unacceptable (prohibited), high, limited, or minimal-risk category. Classification defines which governance and transparency measures apply.

3. Review Data Governance Practices

Assess how each AI system manages member data. Ensure privacy, security, and compliance standards are consistently applied.

4. Implement Transparency Policies

For limited-risk AI, disclose AI usage and clearly label AI-generated content. Transparency builds member trust and ensures regulatory compliance.

5. Train Staff & Update Compliance Frameworks

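Educate your team on AI policies, ethical practices, and governance processes. The Act also requires organizations that deploy AI to take measures ensuring their staff have sufficient AI literacy. Updating internal compliance frameworks ensures ongoing adherence to the law.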
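For teams that track their AI inventory in a spreadsheet or a small script, the audit steps above can be sketched in a few lines of Python. This is a minimal, illustrative sketch, not a compliance tool: the `AISystem` record, the `RiskTier` values, the `FOLLOW_UPS` action list, and the example systems and vendors are all hypothetical, and the follow-up actions paraphrase this article rather than the legal text. The code deliberately does not decide a system's risk tier; a person (ideally with legal input) assigns it, and the script only turns the inventory into a prioritized to-do list.

```python
from dataclasses import dataclass
from enum import Enum


class RiskTier(Enum):
    # Declared from most to least restrictive; audit_report relies on this order.
    UNACCEPTABLE = "unacceptable"
    HIGH = "high"
    LIMITED = "limited"
    MINIMAL = "minimal"


# Hypothetical, simplified follow-ups per tier, paraphrasing this article (not legal advice).
FOLLOW_UPS = {
    RiskTier.UNACCEPTABLE: ["Stop using the system: this practice is prohibited"],
    RiskTier.HIGH: ["Run a risk assessment", "Assign human oversight", "Document data governance"],
    RiskTier.LIMITED: ["Disclose AI use to members", "Label AI-generated content"],
    RiskTier.MINIMAL: ["Record in inventory; no specific obligations noted"],
}


@dataclass
class AISystem:
    name: str
    purpose: str
    vendor: str  # "in-house" for custom-built tools
    processes_member_data: bool
    risk_tier: RiskTier  # assigned by a person after review, not inferred by code


def audit_report(inventory):
    """Return (system name, action) pairs, highest-risk systems first."""
    order = list(RiskTier)  # Enum iteration follows declaration order
    report = []
    for system in sorted(inventory, key=lambda s: order.index(s.risk_tier)):
        actions = list(FOLLOW_UPS[system.risk_tier])
        if system.processes_member_data:
            actions.append("Review privacy and security controls")
        for action in actions:
            report.append((system.name, action))
    return report


# Hypothetical example inventory.
inventory = [
    AISystem("Event FAQ chatbot", "Answers member questions", "Vendor A", True, RiskTier.LIMITED),
    AISystem("Certification exam scorer", "Grades assessments", "in-house", True, RiskTier.HIGH),
    AISystem("Email spam filter", "Filters inbound mail", "Vendor B", False, RiskTier.MINIMAL),
]

for name, action in audit_report(inventory):
    print(f"{name}: {action}")
```

Sorting by tier puts prohibited and high-risk systems at the top of the list, where review effort matters most, and flagging systems that process member data keeps the data governance review from being skipped for lower-risk tools.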

Why Is the EU AI Act an Opportunity, Not Just a Burden?

The EU AI Act may seem daunting, but it aligns closely with nonprofit values. Associations can leverage compliance to strengthen trust, ethics, and fairness in their operations.

Understanding the implications for associations handling EU member data helps turn regulation into a strategic advantage.

1. Aligns with Nonprofit Values

Following the Act reinforces your commitment to transparency, ethics, and fairness. These principles resonate strongly with members and donors.

2. Position as Ethical AI Leaders

By adopting AI responsibly, associations can showcase leadership in ethical technology use. This enhances credibility across the nonprofit sector.

3. Stronger Member and Donor Confidence

Members and donors trust organizations that handle data responsibly and use AI transparently. Confidence increases engagement and long-term support.

4. Long-Term Benefits Outweigh Compliance Effort

While initial compliance requires effort, the benefits of trust, efficiency, and innovation pay off over time. Proactive action positions associations for sustainable success.

What Role Do Digital Publishing Platforms Play in Compliance?

Digital platforms like KITABOO can help associations comply with the EU AI Act efficiently.

Secure platforms with Digital Rights Management (DRM) help protect content and member data. WCAG-compliant features support accessibility for all members, including those with disabilities. Integration with CRM systems reduces fragmented experiences and streamlines member management.

Platforms like KITABOO also enable controlled distribution of AI-powered content. You can track member engagement for more accountability. Customizable reporting and analytics make it easier to demonstrate compliance to regulators and stakeholders.

These platforms help associations scale operations while meeting governance, accessibility, and compliance requirements.

Navigating the implications for associations handling EU member data becomes easier when using reliable digital tools. By leveraging such platforms, nonprofits can manage AI, content, and data responsibly.

Conclusion

The EU AI Act is not a ban but a framework for responsible AI use. For associations handling EU member data, compliance is both a responsibility and an opportunity.

Proactively addressing the implications for associations handling EU member data is the smart way ahead. It will build trust, transparency, and ethical AI practices across your operations.

To make compliance easier, associations can leverage secure, accessible platforms like KITABOO. This platform helps you manage content, ensure data protection, and maintain accessibility standards effortlessly.

Take the first step today. Request a demo to simplify compliance and boost member engagement.

FAQs

What is the EU AI Act?

The EU AI Act is a risk-based law that regulates AI in the EU. It ensures ethical, transparent, and accountable AI use.

Who does the EU AI Act apply to?

It applies to organizations that provide or use AI systems in the EU, or whose AI output is used there, including associations handling EU member data.

What are the AI risk categories?

There are four categories: unacceptable, high, limited, and minimal risk. Requirements decrease across them, from an outright ban for unacceptable-risk AI to few or no obligations for minimal-risk AI.

What are the rules for high-risk AI?

High-risk AI, such as eligibility screening or healthcare decisions, requires strict oversight, risk assessments, and governance.

What are the rules for limited-risk AI?

Limited-risk AI, like chatbots or content generators, must be transparent. Members should know when AI is used.

Why does the EU AI Act matter for associations?

It protects member data, promotes fairness, prevents misuse, and ensures ethical AI adoption.

How can associations prepare for compliance?

Start with an AI audit: inventory systems, classify by risk, review data practices, implement transparency, and train staff.

Discover how a mobile-first training platform can help your organization.

KITABOO is a cloud-based platform to create, deliver & track mobile-first interactive training content.